Patryk Laurent, Ph.D.

Selected Projects

Below is an assortment of my projects outside of my current work.

• Brains and AI for Robots. Building robot "brains" (i.e., systems that allow robots to navigate and interact with the real world) is a way to obtain deep insight into AI problems and approaches. I co-designed and investigated a large-scale, state-of-the-art unsupervised neural network that learned about the world from raw continuous video (https://arxiv.org/abs/1607.06854v2). I also designed and implemented a novel iOS user interface to control and teach robots equipped with on-board machine learning software (live on-stage demo).

• Brains and AI for Home/Consumer Electronics. I led the development of a "brain in a box" that monitored the Wi-Fi and IR commands users sent to nearby devices and learned to associate those commands with visual inputs. After several pairings, the devices could appear to react autonomously. For example, a TV could be "taught" to pause when someone stood up from the couch (patent 1, patent 2). Similarly, with this "brain in a box" nearby, remote-controlled toys could be "taught" to approach target objects in view of the box's camera (patent 3, patent 4).

• Domain-Specific Language for Neural Networks. In 2012 I designed a domain-specific language called NNQL (the Neural Network Query Language). The idea was to enable researchers to describe and run neural networks using a familiar SQL-like syntax. NNQL was implemented as an "internal DSL" in Scala, so a user could write commands like the following directly in their code:

INSERT NEURON sigmoid INTO "L2" QUANTITY 5

PROJECT FULLY FROM NUCLEUS "L1" TO "L2" WEIGHTS N(0.1,0.1)

See the documentation available at the site for more complete examples.
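To make the two NNQL commands above more concrete, here is a minimal Python sketch of what they might build. This is not NNQL itself (which was a Scala internal DSL). The `Network` class and its method names are hypothetical, and `N(0.1,0.1)` is assumed to mean a normal distribution with mean 0.1 and standard deviation 0.1.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Network:
    """Toy container (hypothetical): named groups of neurons plus projections."""
    nuclei: dict = field(default_factory=dict)   # name -> list of activation names
    weights: dict = field(default_factory=dict)  # (src, dst) -> weight matrix

    def insert_neurons(self, activation, into, quantity):
        # Rough analogue of: INSERT NEURON sigmoid INTO "L2" QUANTITY 5
        self.nuclei.setdefault(into, []).extend([activation] * quantity)
        return self

    def project_fully(self, src, dst, mean, std, rng=None):
        # Rough analogue of:
        #   PROJECT FULLY FROM NUCLEUS "L1" TO "L2" WEIGHTS N(0.1,0.1)
        # Assumption: the second argument of N(...) is a standard deviation.
        rng = rng or random.Random(0)
        rows, cols = len(self.nuclei[src]), len(self.nuclei[dst])
        self.weights[(src, dst)] = [
            [rng.gauss(mean, std) for _ in range(cols)] for _ in range(rows)
        ]
        return self


net = Network()
net.insert_neurons("sigmoid", into="L1", quantity=3)
net.insert_neurons("sigmoid", into="L2", quantity=5)
net.project_fully("L1", "L2", mean=0.1, std=0.1)
```

Returning `self` from each method lets calls be chained, which is one common way to get a query-like feel from an embedded DSL.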

• Academic research on reward, vision and attention. I spent significant time in academia investigating the interplay between reward, vision, and attention, using eye-tracking, fMRI, and behavioral response-time measures. I found that low-level visual features such as color or line orientation can robustly capture attention involuntarily. I also found that reinforcement learning agents rewarded for reading sentences quickly develop eye-movement patterns similar to those of humans. (For more on my research, see my résumé or Google Scholar profile.)

• Call by Meaning. Call by meaning is a concept that allows programs or computers to interact with each other without knowing the names of functions or methods. I've implemented a proof-of-concept of this in Objective-C. So you can run commands like:

FoundMethod* found_add = [finder findMethodThatGiven:@[@3, @4] producesOutput:@7];

NSNumber* result = [finder invoke:found_add upon:@[@5, @6]]; // result will be 11
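The lookup step above can be sketched in Python as a search over candidate functions for one that maps the example input to the example output. This is only an illustration of the idea, not a port of the Objective-C proof-of-concept. `MethodFinder` and `find_method_that` are hypothetical names, and the candidate set is supplied by hand.

```python
import operator


class MethodFinder:
    """Finds a callable by example behaviour rather than by name (sketch)."""

    def __init__(self, candidates):
        self.candidates = candidates  # name -> callable

    def find_method_that(self, given, produces_output):
        # Try each candidate on the example arguments and return the first
        # one whose result equals the expected output.
        for fn in self.candidates.values():
            try:
                if fn(*given) == produces_output:
                    return fn
            except Exception:
                continue  # candidate does not accept these arguments
        return None


finder = MethodFinder({
    "add": operator.add,
    "mul": operator.mul,
    "sub": operator.sub,
    "pow": pow,
})

found_add = finder.find_method_that(given=[3, 4], produces_output=7)
result = found_add(5, 6)  # result is 11
```

A single input/output example can match more than one candidate (for instance, `[2, 2]` producing `4` fits both addition and multiplication), so a practical version would likely need several examples to disambiguate.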

• A Minimalist Authorization Server. I was one of the leads developing The Usher, a Node.js authorization server based on OAuth2 standards. Client applications presenting a token from a user's identity provider (like Auth0 or Azure Active Directory) would get a new token from The Usher with authorization based on roles and permissions in a PostgreSQL database. Critically, the design was deliberately simple: The Usher handled authorization only and had no facility for identity at all.

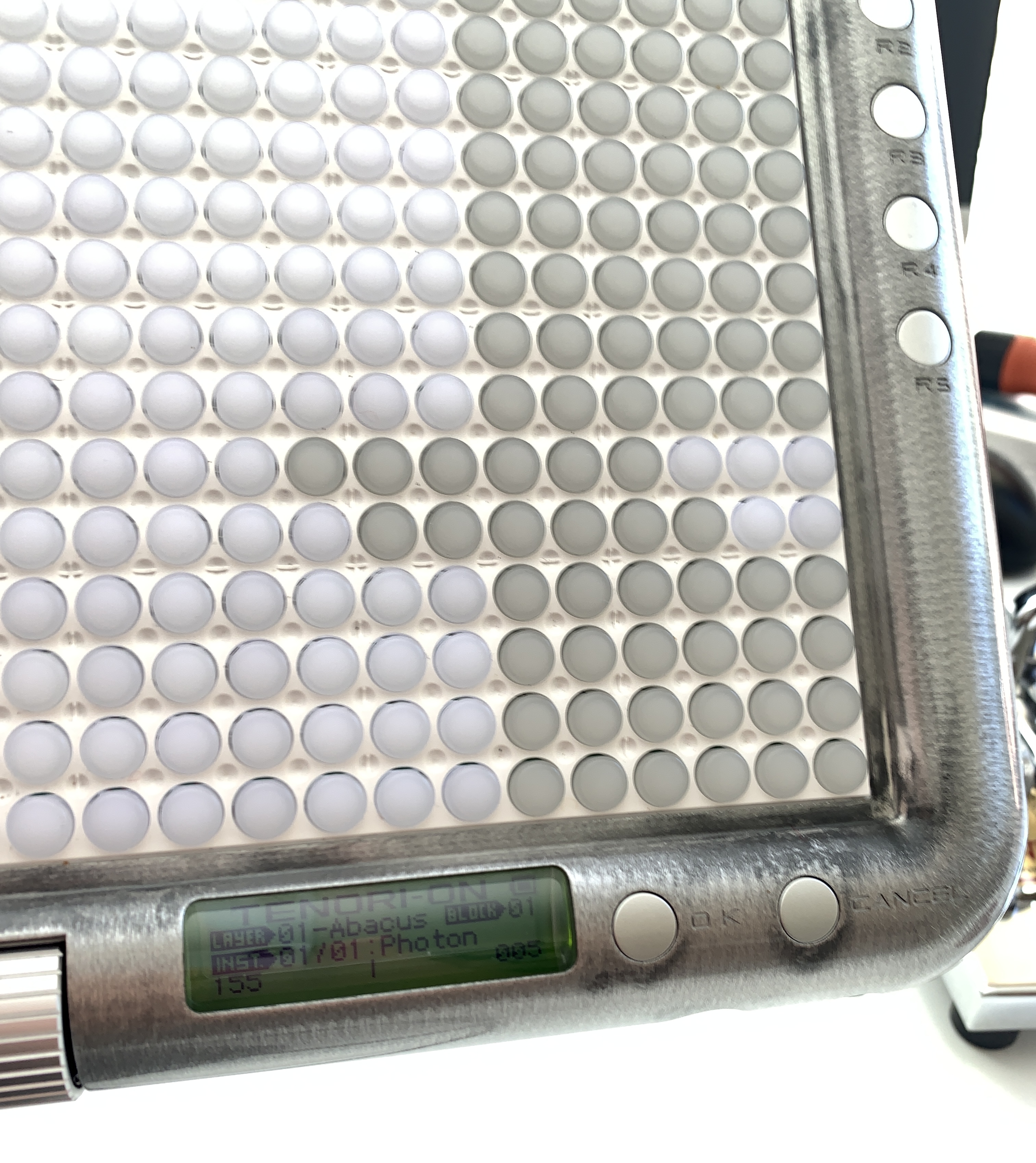
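The token exchange described for The Usher can be sketched roughly as follows. This Python sketch is not The Usher's code or API. The in-memory dictionaries stand in for the PostgreSQL tables, the subject and permission names are invented, the claim names (`sub`, `scope`) follow common OAuth2/JWT conventions, and verification of the identity provider's token signature is assumed to have happened already.

```python
# Hypothetical stand-ins for role and permission tables in the database.
ROLE_ASSIGNMENTS = {
    "auth0|alice": ["editor"],
    "auth0|bob": ["viewer"],
}
ROLE_PERMISSIONS = {
    "editor": ["doc:read", "doc:write"],
    "viewer": ["doc:read"],
}


def issue_access_claims(identity_claims):
    """Build the claims for a new access token from verified identity claims.

    The server stores nothing about who the user is beyond the subject
    identifier asserted by the identity provider; it only maps that subject
    to roles and permissions.
    """
    subject = identity_claims["sub"]
    roles = ROLE_ASSIGNMENTS.get(subject, [])
    permissions = sorted(
        {perm for role in roles for perm in ROLE_PERMISSIONS.get(role, [])}
    )
    return {"sub": subject, "roles": roles, "scope": " ".join(permissions)}


alice_claims = issue_access_claims({"sub": "auth0|alice"})
unknown_claims = issue_access_claims({"sub": "auth0|mallory"})
```

A subject with no role assignments receives an empty scope rather than an error, which is one possible policy. Signing the resulting claims into a token is omitted here.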

• Tenori-on Software Development. The Tenori-on is an innovative audio-visual music device designed by media artist Toshio Iwai and released by Yamaha in 2007. Thanks to guidance from pika.blue, I was able to design and implement a novel Abacus Layer type for the Tenori-on, as well as some new user interface commands (e.g., Quick Layer Copy with R1). For this project, I had to write code in Toshiba assembly language. (See Figure 1.)

• Tenori-on Simulator. I am currently working on a high-level Tenori-on simulator to accelerate development and testing of new operating modes on the Tenori-on. The assembly language interpreter is written in Scala using parser combinators. (See Figure 2.)
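For readers unfamiliar with parser combinators, here is a minimal Python sketch of the technique: small parsing functions are composed into a parser for one assembly-style line. It is not the simulator's Scala code, and the line format (`MNEMONIC operand, operand`) is a generic assumption rather than actual Toshiba assembly syntax.

```python
import re


def token(pattern):
    """Parser that matches a regex at the current position, skipping spaces."""
    compiled = re.compile(r"\s*(" + pattern + ")")

    def parse(text, pos):
        m = compiled.match(text, pos)
        return (m.group(1), m.end()) if m else None
    return parse


def seq(*parsers):
    """Run parsers one after another; fail if any of them fails."""
    def parse(text, pos):
        values = []
        for p in parsers:
            r = p(text, pos)
            if r is None:
                return None
            value, pos = r
            values.append(value)
        return values, pos
    return parse


def many(parser):
    """Apply a parser zero or more times, collecting the results."""
    def parse(text, pos):
        values = []
        while (r := parser(text, pos)) is not None:
            value, pos = r
            values.append(value)
        return values, pos
    return parse


def fmap(parser, fn):
    """Transform the value produced by a successful parse."""
    def parse(text, pos):
        r = parser(text, pos)
        return None if r is None else (fn(r[0]), r[1])
    return parse


# Hypothetical grammar: a mnemonic followed by one or more comma-separated operands.
mnemonic = token(r"[A-Za-z]+")
operand = token(r"[A-Za-z0-9_]+")
comma_operand = fmap(seq(token(","), operand), lambda pair: pair[1])
operands = fmap(seq(operand, many(comma_operand)), lambda v: [v[0]] + v[1])
instruction = fmap(seq(mnemonic, operands),
                   lambda v: {"op": v[0].upper(), "args": v[1]})


def parse_line(line):
    """Parse a whole line; return None if anything is left unparsed."""
    r = instruction(line, 0)
    if r is None or line[r[1]:].strip():
        return None
    return r[0]


parsed = parse_line("load r1, 5")
```

Scala's parser combinator library offers the same building blocks (sequencing, repetition, mapping) as operators, so grammars there read more compactly than this hand-rolled version.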

Figure 1. The new Abacus Layer I implemented on the Tenori-on in Toshiba assembly language (2020). Although fun to play directly, Abacus was an iterative step towards a tape-delay echo layer. When targeted by another layer, beads move one by one, fading out.

Figure 2 (video). A quick peek at a high-level Tenori-on simulator that I am working on. The idea is to make it faster to iterate while developing new functionality, instead of having to continuously flash new firmware to the device.

• iOS Music Apps. In the early days of the App Store, I created several music apps, two of which were publicly released (PaklSound1 and Bossa Nova; see Figures 3 & 4). I have not had enough time to keep these apps updated, so they are no longer available. I am considering releasing them as open source.

Figure 3. PaklSound1 was a simple sequencer app available very early on in the iOS App Store.

Figure 4 (video). Bossa Nova for iPad was released later.